Hello everyone!

Thank you for your patience as we work on product updates for Experiment.

First, we want to let you know about an enhancement to Goals and Takeaways that we’re releasing this week!

Better Goals & Takeaways are arriving this week!

The ultimate goal of running experiments is to iterate on your product in ways that lead to a measurable improvement in a desired outcome. Establishing this causal relationship is critical to knowing whether the feature you shipped or the change you made actually affected your results.

To do that, you need to:

- Set a measurable goal that matters to you

- Run your experiment against this goal

- Know what to do with your results once the data reaches statistical significance

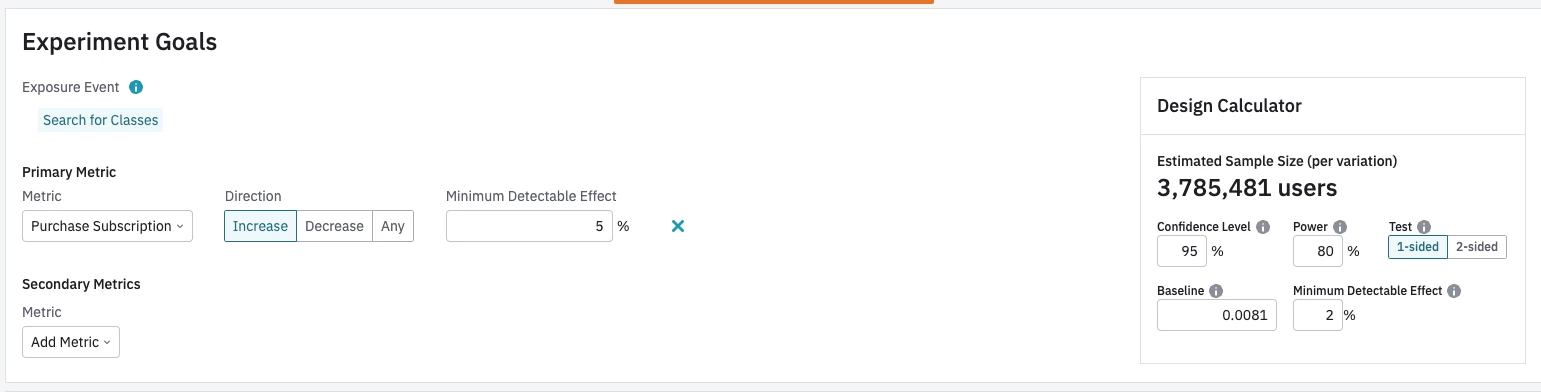

We’ve adjusted the goal-setting stage in Experiment by adding a “Minimum Detectable Effect” (MDE), or goal. This is the measurable change in a metric that you hope to achieve with the experiment, for example “Increase subscription purchases by 5%.”

We then combine the goal you’ve set with our statistical analysis of the experiment to recommend what you should do next. This recommendation appears in a new “Summary” card.

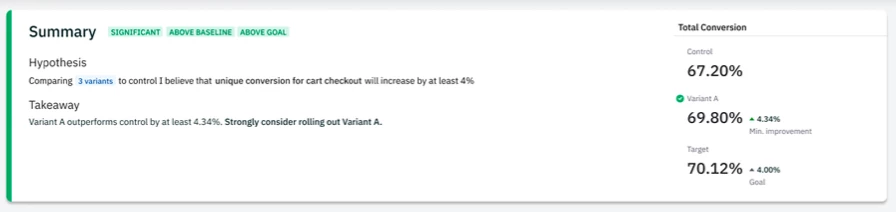

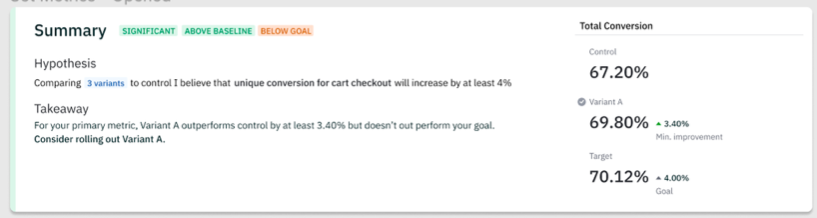

At a glance, the card shows whether your experiment was statistically significant, whether it performed above the baseline, and whether it reached your goal. It also restates your original hypothesis and gives our recommended next step. Finally, it shows a quick snapshot of how the control and variants performed against your target.

The example above shows an experiment whose results were statistically significant but did not reach the desired goal. With this information, you can make a more informed decision about whether to roll the feature out or make some adjustments to reach your target.

This information was already available in the product. Now it is much easier for you and your stakeholders to see everything you need quickly.
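The minimal Python sketch below shows how the three Summary card checks differ from one another. It is not Amplitude’s implementation; the class, function, field names, and recommendation strings are all hypothetical. It makes three assumptions:

- The p-value comes from whatever significance test your analysis uses.
- The goal (MDE) is a relative lift.
- “Reached goal” compares the observed lift with the MDE.

```python
from dataclasses import dataclass


@dataclass
class Arm:
    """Conversions and exposed users for one arm (hypothetical structure)."""
    conversions: int
    users: int

    @property
    def rate(self) -> float:
        return self.conversions / self.users


def summarize(control: Arm, variant: Arm, p_value: float,
              mde: float, alpha: float = 0.05) -> dict:
    """Hypothetical version of the three Summary card checks.

    Assumes p_value was computed elsewhere and that the goal (MDE) is a
    relative lift, e.g. 0.05 for "increase purchases by 5%".
    """
    lift = (variant.rate - control.rate) / control.rate  # relative lift over control
    significant = p_value < alpha        # assumed significance threshold
    above_baseline = lift > 0            # variant beat the control
    reached_goal = lift >= mde           # assumed: point estimate vs. goal

    # Illustrative recommendations only; not Amplitude's wording or logic.
    if significant and reached_goal:
        next_step = "roll out"
    elif significant and above_baseline:
        next_step = "roll out or adjust to reach the goal"
    elif significant:
        next_step = "do not roll out"
    else:
        next_step = "inconclusive"
    return {
        "lift": lift,
        "significant": significant,
        "above_baseline": above_baseline,
        "reached_goal": reached_goal,
        "next_step": next_step,
    }


# Significant and above baseline, but a 3% lift falls short of a 5% goal.
result = summarize(Arm(1000, 20000), Arm(1030, 20000), p_value=0.01, mde=0.05)
print(result)
```

The sketch separates “statistically significant” from “reached goal.” A lift can be significant and still fall short of the goal you set, which is the situation the Summary card example describes.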

Other update reminders:

A couple of weeks ago, we sent an email about several enhancements we’ve made to the product over the last few months. As a quick reminder, these included:

- Improved Exposure Tracking: A simple, well-defined default exposure event for tracking a user’s exposure to a variant. It improves analysis accuracy and reliability by removing the possibility of misattribution and race conditions caused by Assignment events. To take advantage of Improved Exposure Tracking, you’ll need to update your Experiment implementation.

- Deprecate ‘Off’ as the Default Variant Value: With the move to improved exposure tracking, we want to maintain user property consistency across the system. Therefore, we have changed the experiment evaluation servers to unset an experiment’s user property when the user is not bucketed into a variant, rather than setting the value to ‘off’.

- Integrated SDKs: Client-side SDKs now support seamless integration between the Amplitude Analytics and Experiment SDKs. Integrated SDKs simplify initialization, enable real-time user properties, and automatically track exposure events when a variant is served to the user.

- Experiment Lifecycle: An all-new guided experience for experiments. Features are now organized around the way teams work, from planning and running an experiment to analyzing the results and making decisions. You’ll also notice a status bar that tracks key tasks in each stage and the duration of your experiment, along with suggested next steps.