Hi,

I’m trying to import events into Amplitude from S3, following the steps described here:

https://developers.amplitude.com/docs/amazon-s3-import-guide#optional-manage-event-notifications

I successfully created an Amazon S3 import source, and my data file (events.json) contains one event:

{ "action": "watch tv", "user": "sucheth13@gmail.com", "device": "host1", "event_properties": { "business_id": "123" }, "user_properties": { "utm_channel_c": "discovery", "utm_channel_s": "network" }, "epoch": "1645066434189", "session_id": "1", "app_version": "1"}

My converter config:

{ "converterConfig": { "fileType": "json", "compressionType": "none", "convertToAmplitudeFunc": { "event_type": "action", "user_id": "user", "device_id": "device", "event_properties": { "business_id_encid": "business_id" }, "user_properties": { "utm_channel_category": "utm_channel_c", "utm_channel_source": "utm_channel_s" }, "time": "epoch", "session_id": "session_id", "app_version": "app_version" } }, "keyValidatorConfig": { "filterPattern": ".*\\.json" }}

According to the documentation, event_type should be mapped to action, user_id to user, and so on for the remaining fields in my ingested data file.
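
To make my expectation concrete, here is a rough Python sketch of how I understand the mapping should behave. This is only my reading of the docs, not Amplitude's actual converter: the apply_mapping helper is hypothetical, and I am assuming that nested blocks such as event_properties are looked up inside the block with the same name in the source event.

```python
import json

# The single event from my events.json file.
event = {
    "action": "watch tv",
    "user": "sucheth13@gmail.com",
    "device": "host1",
    "event_properties": {"business_id": "123"},
    "user_properties": {"utm_channel_c": "discovery", "utm_channel_s": "network"},
    "epoch": "1645066434189",
    "session_id": "1",
    "app_version": "1",
}

# The convertToAmplitudeFunc part of my converter config:
# keys are Amplitude field names, values are field names in my file.
mapping = {
    "event_type": "action",
    "user_id": "user",
    "device_id": "device",
    "event_properties": {"business_id_encid": "business_id"},
    "user_properties": {
        "utm_channel_category": "utm_channel_c",
        "utm_channel_source": "utm_channel_s",
    },
    "time": "epoch",
    "session_id": "session_id",
    "app_version": "app_version",
}


def apply_mapping(mapping, source):
    """Hypothetical helper: build the target event from a key-to-key mapping.

    Assumption: a nested mapping block reads from the source block
    that has the same name (e.g. event_properties).
    """
    result = {}
    for target_key, source_ref in mapping.items():
        if isinstance(source_ref, dict):
            result[target_key] = apply_mapping(source_ref, source.get(target_key, {}))
        else:
            result[target_key] = source.get(source_ref)
    return result


converted = apply_mapping(mapping, event)
print(json.dumps(converted, indent=2))
```

In other words, I would expect the converted sample to contain keys such as event_type, user_id, and device_id rather than action, user, and device.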

However, that is not what happens: I cannot see this event in Amplitude.

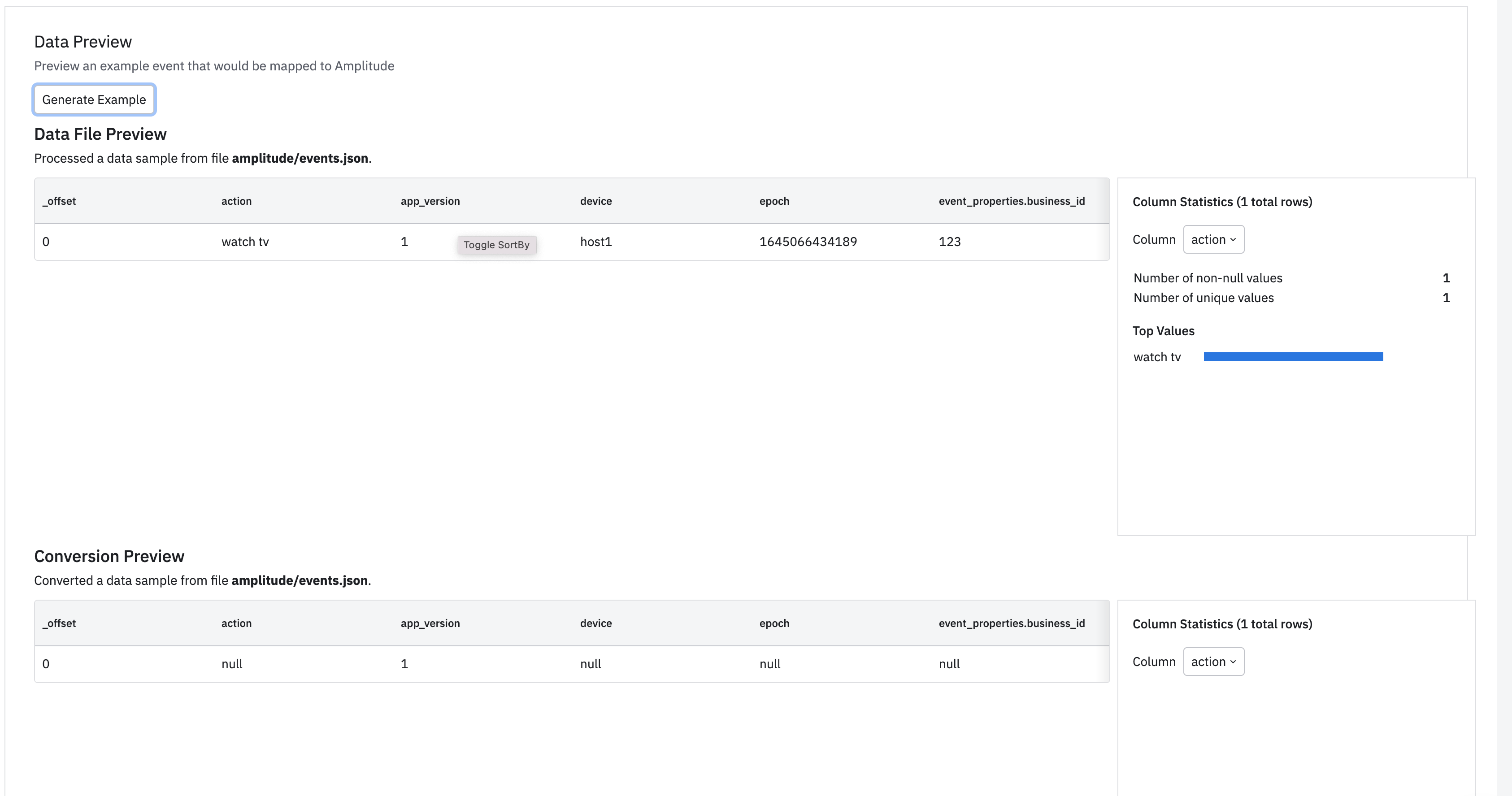

Also, in the screenshot below, why is the converted data sample still showing the fields from my ingested data? Shouldn’t it show the fields from the converter config?

What am I doing wrong here?