Hi community,

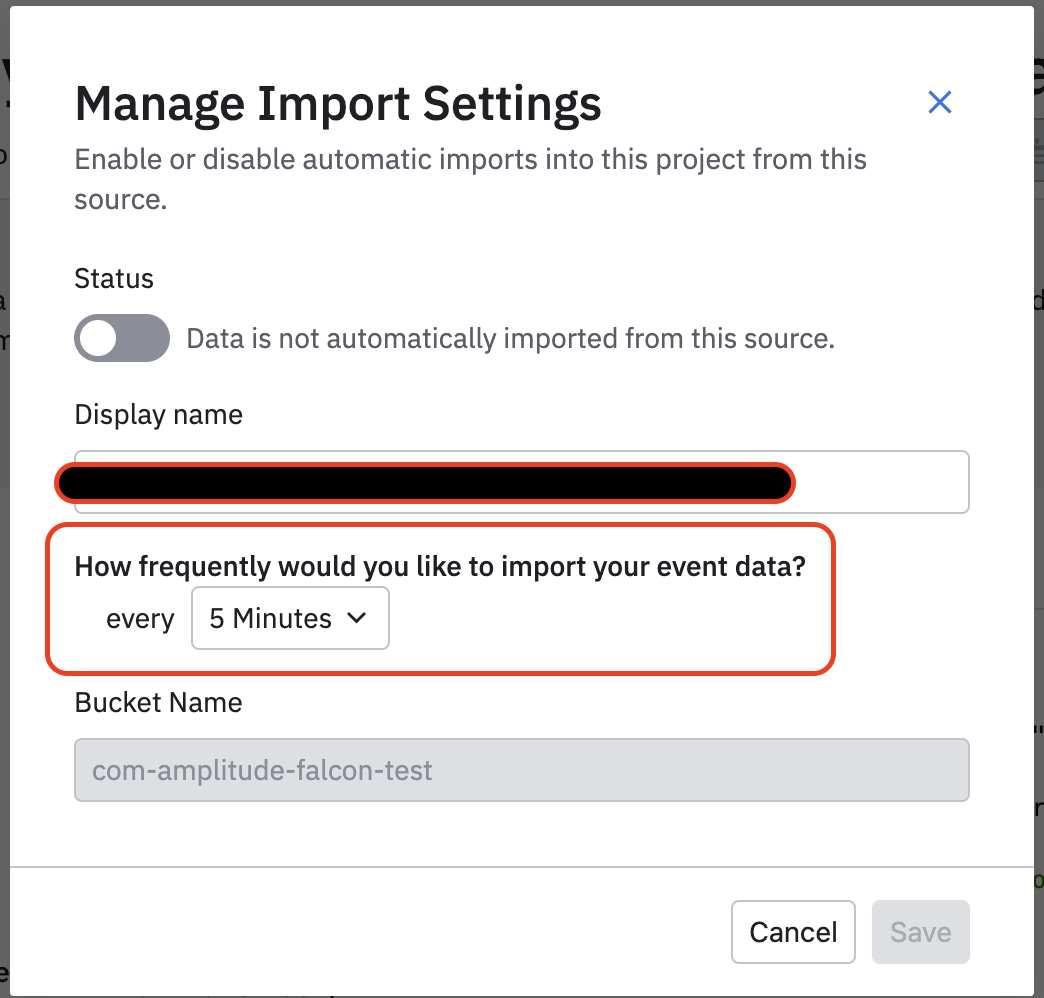

I plan to use the BigQuery Import feature to populate data as part of a migration from Google Analytics Universal (UA) to Amplitude. The feature is in beta, and I have a few questions that I thought were best posted here.

- This process requires that I set up a GCS bucket, but the documentation doesn't mention size constraints. Are there any? I’m moving a few years’ worth of data!

- Is it best to break up the historical session tables into monthly temp tables first (which would also give me the option to remove unnecessary events from sessions if I choose)?

- Using the “Time-based” import, if I run multiple imports from the monthly tables mentioned above, can I assume the data is simply additive by date and that each import does not replace previously imported data? Do I need to go from the oldest date to the newest?

I also see “SQL query” listed in the documentation. Should I design the SQL to output a known schema, or does the import assume the UA (or GA4) schema as part of the design of this process?
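
To make the monthly-split idea concrete, here is a rough Python sketch that only builds the BigQuery SQL strings, one per calendar month. It assumes the standard UA export table naming (`ga_sessions_YYYYMMDD`) and uses BigQuery's `_TABLE_SUFFIX` wildcard filter. The names `my-project.ua_export` and `my-project.amplitude_staging` are placeholders, and it creates regular staging tables rather than true temp tables. Nothing here comes from the Amplitude docs, so treat it as an illustration of the plan rather than a recommended approach.

```python
from datetime import date, timedelta


def month_ranges(start, end):
    """Yield (first_day, last_day) for each calendar month overlapping [start, end]."""
    current = date(start.year, start.month, 1)
    while current <= end:
        # Compute the first day of the following month.
        if current.month == 12:
            next_month = date(current.year + 1, 1, 1)
        else:
            next_month = date(current.year, current.month + 1, 1)
        # Clip the month to the requested overall range.
        yield max(current, start), min(next_month - timedelta(days=1), end)
        current = next_month


def monthly_query(source_dataset, dest_dataset, first, last):
    """Build one CREATE TABLE statement covering the daily tables from first to last."""
    # source_dataset and dest_dataset are placeholder names (assumptions).
    return (
        f"CREATE OR REPLACE TABLE `{dest_dataset}.sessions_{first:%Y%m}` AS\n"
        f"SELECT *\n"
        f"FROM `{source_dataset}.ga_sessions_*`\n"
        f"WHERE _TABLE_SUFFIX BETWEEN '{first:%Y%m%d}' AND '{last:%Y%m%d}'"
    )


if __name__ == "__main__":
    # Oldest month first; the date range here is only an example.
    for first, last in month_ranges(date(2020, 11, 15), date(2021, 1, 10)):
        print(monthly_query("my-project.ua_export", "my-project.amplitude_staging", first, last))
        print()
```

Replacing `SELECT *` with an explicit column list or a filter on the unnested hits would be the place to drop unwanted events before import.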

Many thanks for answers/thoughts/ideas.